Share This Article

In the fast-paced world of AI development, we’re constantly pushing the boundaries of what’s possible with large language models. Yet despite all our advances, traditional Retrieval-Augmented Generation (RAG) systems often leave us frustrated when dealing with complex, interconnected information. You’ve probably experienced it yourself—asking about relationships between concepts only to receive technically correct but frustratingly disconnected snippets of information. It’s like having all the puzzle pieces but no way to see how they fit together.

This disconnect isn’t just an annoyance; it’s a fundamental limitation holding back more sophisticated AI applications. When your RAG system can’t trace the connection between a medication and its contraindications across multiple documents, or when it fails to understand how different components in a technical system interact, you’re seeing the ceiling of what vector similarity alone can achieve. What we need isn’t just better search—we need systems that understand relationships the way we do.

Enter GraphRAG, a hybrid approach that’s changing how we think about knowledge retrieval in AI systems. By marrying the semantic search capabilities of vector databases with the relationship-modeling power of graph databases, GraphRAG doesn’t just find relevant information—it understands how that information connects to everything else in your knowledge base. Think of it as upgrading from a library card catalog to having a knowledgeable librarian who not only finds the books you need but also explains how they relate to each other and suggests connections you hadn’t considered.

In this article, we’ll dive into:

- What GraphRAG is and why traditional RAG falls short for complex queries

- The technical architecture combining vector and graph databases

- How the hybrid retrieval process works in practice

- Real-world applications across different industries

- Implementation considerations and optimization strategies

- Performance benchmarking insights from recent research

- Future directions including agentic approaches and advanced integrations

Understanding GraphRAG

What Is GraphRAG?

Let’s start with the basics. GraphRAG is essentially a knowledge retrieval system that combines two powerful approaches: the semantic understanding of vector databases and the relationship awareness of graph databases. If traditional RAG is like having a really good search engine, GraphRAG is like having that search engine plus a mind map that shows how everything connects.

You can think of it this way: when you’re researching a topic, you don’t just collect relevant documents—you build mental connections between ideas, trace cause-and-effect relationships, and understand how different concepts influence each other. That’s exactly what GraphRAG brings to AI systems. It doesn’t just retrieve information; it retrieves context.

Here’s a simple example to illustrate the difference:

# Traditional RAG approach

def traditional_rag_query(query):

# Find semantically similar documents

similar_docs = vector_db.similarity_search(query, k=5)

# Return relevant chunks

return [doc.content for doc in similar_docs]

# GraphRAG approach

def graphrag_query(query):

# Step 1: Find semantically similar content

entry_points = vector_db.similarity_search(query, k=3)

# Step 2: Explore relationships from those entry points

connected_info = []

for doc in entry_points:

# Traverse the knowledge graph to find related entities

relationships = graph_db.traverse_from(doc.entities)

connected_info.extend(relationships)

# Step 3: Combine semantic and relational context

return merge_contexts(entry_points, connected_info)The magic happens in that second step—GraphRAG doesn’t stop at finding similar content. It explores the web of connections radiating out from that content, building a richer, more complete picture of the information landscape.

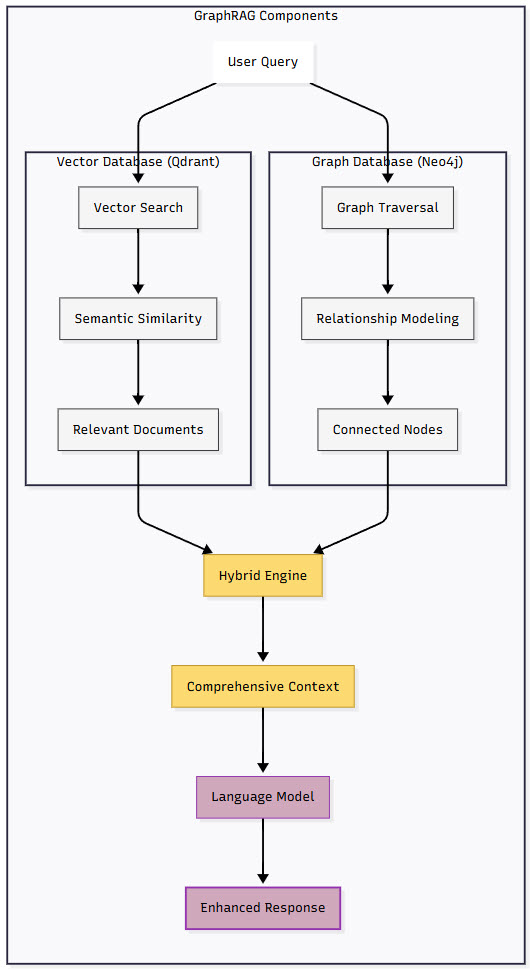

Figure 1: GraphRAG Components Integration

This diagram illustrates how vector and graph databases work in tandem within GraphRAG. The vector database handles semantic similarity searches while the graph database manages relationship modeling. Both feed into a hybrid engine that assembles comprehensive context, demonstrating how GraphRAG achieves both breadth through semantic search and depth through relationship traversal.

Why Traditional RAG Hits a Wall

Now, don’t get me wrong—traditional RAG has been revolutionary for AI applications. It’s given LLMs access to external knowledge bases and dramatically reduced hallucinations. But when you start dealing with complex, real-world queries, its limitations become painfully clear.

The Connection Blindness Problem: Traditional RAG excels at finding documents that talk about similar things, but it’s blind to how those things relate to each other. Imagine you’re investigating how supply chain disruptions affect semiconductor manufacturers. A traditional RAG system might retrieve documents about supply chains and documents about semiconductors, but it won’t understand that a shortage of rare earth metals (mentioned in document A) directly impacts the production capacity of chip fabs (discussed in document B).

Multi-hop Reasoning Barriers: Here’s where things get really tricky. When you ask a question like “What medications should be avoided by patients with kidney disease who are also taking blood thinners?”, you’re asking the system to navigate multiple relationships: medications → kidney interactions, medications → blood thinner interactions, and the intersection of both. Traditional RAG would need multiple separate queries and hope the LLM can piece together the connections. GraphRAG? It follows these relationship chains naturally.

Context Fragmentation: We’ve all seen this—you ask about a complex topic and get back five relevant but disconnected snippets. Each piece makes sense on its own, but the overall picture remains fuzzy. It’s like trying to understand a movie by watching random five-minute clips. GraphRAG maintains the narrative thread by understanding how information flows between documents.

How GraphRAG Changes the Game

By adding relationship awareness to semantic search, GraphRAG fundamentally changes what’s possible in knowledge retrieval. Let me break down how it addresses each of those limitations:

First, it eliminates connection blindness by explicitly modeling relationships. When GraphRAG ingests documents, it doesn’t just create embeddings—it extracts entities and maps out how they connect. So when you search for information, you’re not just finding similar content; you’re discovering the entire network of related concepts.

Second, it enables natural multi-hop reasoning. Graph traversal is literally designed for following chains of connections. Those complex queries that would have traditional RAG systems tied in knots? GraphRAG handles them by simply walking the graph from node to node, following the breadcrumb trail of relationships.

Third, it maintains context coherence by preserving the connective tissue between information fragments. Instead of returning isolated snippets, GraphRAG can trace the narrative threads that connect different pieces of information, giving you not just the facts but the story they tell together.

A research director at a major pharmaceutical company put it perfectly: “Our old RAG system could find every document mentioning a drug compound, but GraphRAG shows us how that compound travels through biological systems, what it interacts with along the way, and what downstream effects we should watch for. It’s the difference between having ingredients and understanding the recipe.”

The Technical Architecture Behind GraphRAG

Building Blocks: Vector and Graph Databases

To really understand how GraphRAG works, we need to look under the hood at its two main engines: vector databases and graph databases. Each brings unique strengths to the table, and it’s their combination that makes GraphRAG so powerful.

Vector Databases: The Semantic Search Engine

Vector databases are the workhorses of semantic search. They transform text (or images, audio, whatever you’re working with) into high-dimensional mathematical representations called embeddings. These embeddings capture the meaning of content in a way that computers can understand and compare.

In GraphRAG implementations, vector databases like Qdrant handle the heavy lifting of semantic similarity. When you submit a query, it gets transformed into an embedding, and the vector database finds other embeddings that are closest to it in the high-dimensional space. It’s remarkably effective—documents about “automobiles” and “cars” end up near each other even though they use different words.

Here’s what makes modern vector databases like Qdrant particularly well-suited for GraphRAG:

- HNSW algorithm: Hierarchical Navigable Small World graphs enable lightning-fast searches even with millions of vectors

- Compression techniques: Advanced compression can improve search performance by up to 40x for high-dimensional vectors

- Flexible filtering: You can combine semantic search with metadata filtering for more precise retrieval

Graph Databases: The Relationship Navigator

While vector databases handle the “what,” graph databases like Neo4j handle the “how”—how things connect, relate, and influence each other. They store information as nodes (entities) connected by edges (relationships), creating a web of knowledge that mirrors how information naturally connects in the real world.

What makes graph databases special for GraphRAG:

- Native relationship storage: Unlike relational databases that struggle with complex joins, graph databases are built for relationships

- Efficient traversal: Following connections is a graph database’s bread and butter—what would take hours in SQL takes seconds in Cypher

- Flexible schema: You can model any type of relationship without restructuring your entire database

To put this in perspective, a five-level relationship traversal (like tracing from a company to its subsidiaries to their suppliers to their environmental impacts to regulatory implications) takes about two seconds in Neo4j. Try that in a traditional database and you might be waiting an hour.

The Power of Combination

Here’s where things get interesting. Vector and graph databases aren’t just coexisting in GraphRAG—they’re actively complementing each other:

| Capability | Vector Database | Graph Database | Combined in GraphRAG |

|---|---|---|---|

| Finding related content | Excellent (semantic similarity) | Limited (exact matches) | Semantic entry points for graph exploration |

| Understanding relationships | Poor (no relationship model) | Excellent (native support) | Rich relationship context for semantic matches |

| Query speed | Very fast for similarity | Fast for traversal | Optimized two-stage retrieval |

| Handling ambiguity | Good (semantic understanding) | Requires precise queries | Semantic queries with precise relationship following |

| Scalability | Highly scalable | Scales with relationship complexity | Balanced approach using vector filtering |

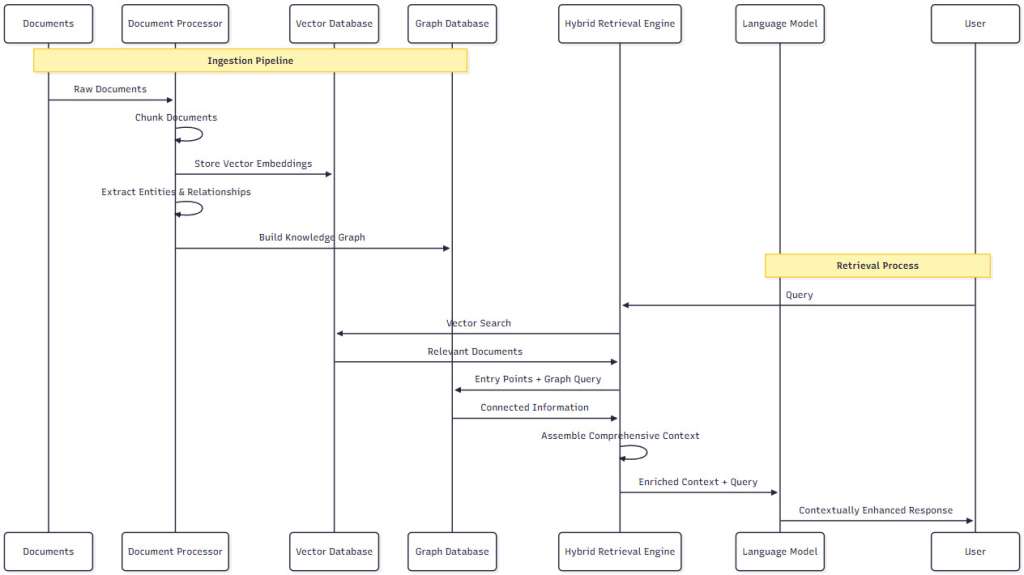

Figure 2: Complete GraphRAG Architecture

This comprehensive diagram shows both the ingestion pipeline (document processing, chunking, embedding, entity extraction) and the retrieval process (query analysis, hybrid search, context assembly). Notice how the system maintains separate but connected pathways for vector and graph operations, converging in the hybrid retrieval engine.

Core Components and Data Flow

GraphRAG’s architecture consists of five key components that work together like a well-oiled machine. Let’s walk through each one:

1. Document Processor: This is where raw documents get transformed into GraphRAG-ready data. It handles chunking (breaking documents into digestible pieces), preprocessing (cleaning and standardizing text), and preparing content for both vector embedding and entity extraction. The key here is maintaining semantic coherence—chunks need to be large enough to preserve context but small enough for efficient processing.

2. Vector Database (typically Qdrant): This stores the embedded representations of document chunks. When a query comes in, this is usually the first stop—finding semantically relevant content that serves as entry points for deeper exploration.

3. Graph Database (typically Neo4j): The relationship headquarters. This stores entities as nodes and their relationships as edges, creating a navigable knowledge network. Each entity is linked back to its source documents, creating a bridge between semantic and relational search.

4. Hybrid Retrieval Engine: The conductor of the orchestra. This component decides how to balance vector and graph search for each query, merges results from both sources, and assembles the final context. It’s smart about when to emphasize semantic similarity versus relationship exploration.

5. LLM Integration Layer: The final piece that takes the enriched context from the hybrid retrieval engine and feeds it to the language model for response generation. This layer handles prompt construction, context windowing, and response formatting.

The Data Journey: From Documents to Knowledge

Let’s trace how information flows through GraphRAG, from initial ingestion to final retrieval. Understanding this journey is crucial for optimizing your implementation.

The Ingestion Pipeline

When you feed documents into GraphRAG, they undergo a sophisticated transformation:

># Simplified GraphRAG ingestion pipeline

class GraphRAGIngestionPipeline:

def __init__(self, vector_db, graph_db, embedder, entity_extractor):

self.vector_db = vector_db

self.graph_db = graph_db

self.embedder = embedder

self.entity_extractor = entity_extractor

def ingest_document(self, document):

# Step 1: Intelligent chunking

chunks = self.semantic_chunking(document)

# Step 2: Create embeddings for vector search

for chunk in chunks:

embedding = self.embedder.embed(chunk.text)

chunk_id = self.vector_db.add(embedding, metadata={

'text': chunk.text,

'doc_id': document.id,

'position': chunk.position

})

# Step 3: Extract entities and relationships

entities, relationships = self.entity_extractor.extract(chunk.text)

# Step 4: Build the knowledge graph

for entity in entities:

node_id = self.graph_db.create_or_get_node(

label=entity.type,

properties={'name': entity.name, 'chunk_id': chunk_id}

)

# Link entity to document

self.graph_db.create_edge(

source=node_id,

target=document.id,

relationship='APPEARS_IN'

)

# Step 5: Create inter-entity relationships

for rel in relationships:

self.graph_db.create_edge(

source=rel.source,

target=rel.target,

relationship=rel.type,

properties=rel.attributes

)

def semantic_chunking(self, document):

"""Chunk documents while preserving semantic coherence"""

# Implementation varies but focuses on maintaining

# meaningful context boundaries

passThe beauty of this dual approach is that each piece of information gets stored in the format that best suits its retrieval. Semantic meaning goes into vectors, relationships go into the graph, and cross-references tie everything together.

The Retrieval Process

When a query arrives, GraphRAG orchestrates a sophisticated retrieval dance:

Query Analysis: First, the system analyzes the query to understand what type of information is needed. Is this primarily a semantic search? Does it require relationship traversal? Most often, it’s both.

Vector Search Phase: The query gets embedded and sent to the vector database, which returns the most semantically similar chunks. These aren’t just results—they’re launching points for graph exploration.

Graph Traversal Phase: Using entities found in the vector search results, GraphRAG explores the knowledge graph. It might traverse relationships like “manufactured_by,” “treats_condition,” or “regulates_industry,” building a network of relevant connections.

Context Assembly: Here’s where the magic happens. The system merges semantic matches with relationship discoveries, creating a rich context that captures both what’s relevant and how it all connects.

Response Generation: Finally, this enriched context gets passed to the LLM, which can now generate responses informed by both semantic relevance and relationship awareness.

Figure 3: GraphRAG Process Flow

This sequence diagram illustrates the complete flow from document ingestion through to user query response. The process clearly separates the offline ingestion pipeline from the online retrieval process, showing how both vector and graph databases contribute to the final contextually enhanced response.

Practical Applications of GraphRAG

Knowledge Management and Research

In research environments, GraphRAG is transforming how we navigate complex knowledge landscapes. Let me share some compelling examples:

Medical Research: Imagine you’re researching drug interactions for patients with multiple conditions. Traditional RAG might find documents about each drug and condition separately. GraphRAG? It traces the web of interactions: Drug A → affects liver enzyme CYP3A4 → which metabolizes Drug B → potentially causing toxic buildup → especially risky in patients with Condition C. It’s like having a research assistant who not only finds relevant papers but also connects the dots between them.

Legal Analysis: Law firms are using GraphRAG to navigate the complex web of precedents, statutes, and case law. When researching a novel legal question, the system can trace how different cases have interpreted similar statutes, how those interpretations have evolved over time, and what related legal principles might apply. One legal tech director told me, “It’s like having a senior partner’s ability to see connections between cases, but at the speed of software.”

Scientific Literature Review: Researchers conducting systematic reviews can use GraphRAG to map the landscape of scientific knowledge. The system doesn’t just find papers on a topic—it reveals research lineages, conflicting findings, methodological relationships, and emerging trends that might take months to discover manually.

Customer Support and Documentation

Technical support is another area where GraphRAG shines by understanding how different parts of complex systems interact:

Intelligent Troubleshooting: When a customer reports an issue, GraphRAG can navigate through product documentation, understanding how different components interact. If a user says “the API returns a 403 error when I try to update user profiles,” GraphRAG can trace: API endpoint → authentication system → permission model → user role configuration → common misconfigurations. It provides not just the relevant docs but the troubleshooting path.

Documentation Navigation: For complex technical products, GraphRAG enables natural language queries that span multiple documentation sections. Users can ask questions like “How do I set up monitoring for my deployed models?” and get answers that pull from deployment guides, monitoring documentation, and best practices—all properly connected and contextualized.

Financial Analysis and Risk Assessment

The financial sector is finding GraphRAG particularly valuable for understanding complex market relationships:

Investment Analysis: Analysts use GraphRAG to understand how companies, sectors, and economic indicators interconnect. When evaluating an investment, the system can trace relationships like: Company A → supplies critical components to → Company B → which dominates market segment X → affected by regulation Y → influenced by economic indicator Z. It’s connecting dots that might take analysts days to piece together.

Fraud Detection: By modeling transactions, entities, and relationships in a graph while using vector search for pattern matching, financial institutions can identify complex fraud schemes. The system can spot unusual patterns that span multiple accounts, timeframes, and transaction types—connections that would be nearly impossible to find with traditional methods.

Integration with Modern AI Systems

The Role in AI Architecture

GraphRAG isn’t meant to replace your existing AI infrastructure—it’s designed to enhance it. Think of it as adding a knowledge layer that sits between your data sources and your AI models, enriching the context available for decision-making.

In a typical modern AI architecture, GraphRAG serves as an intelligent retrieval layer that can be integrated with various components:

- LLM Applications: Providing richer context for more accurate and comprehensive responses

- Agent Systems: Enabling agents to navigate complex knowledge spaces and make better-informed decisions

- Analytics Platforms: Offering relationship-aware data exploration capabilities

- Decision Support Systems: Providing the full context needed for complex decision-making

Performance Considerations

When implementing GraphRAG at scale, performance optimization becomes crucial. Here are key considerations based on real-world implementations:

Hardware Requirements:

- Vector operations benefit significantly from GPU acceleration

- Graph databases need sufficient RAM for caching frequently accessed relationships

- SSD storage is essential for both vector and graph databases at scale

Software Optimizations:

- Use vector database filtering to reduce the graph traversal scope

- Implement caching strategies for frequently accessed graph patterns

- Consider asynchronous processing for complex multi-hop traversals

- Batch operations whenever possible to reduce overhead

Scaling Strategies:

- Horizontal scaling for vector databases is straightforward—add more nodes

- Graph databases can be partitioned by domain or relationship type

- Consider read replicas for high-query-volume scenarios

- Use query routing to balance load between vector and graph operations

| Optimization Technique | Ingestion Speed Improvement | Retrieval Latency Improvement | Response Quality Improvement |

|---|---|---|---|

| Baseline Implementation | 0% | 0% | 0% |

| Enhanced Chunking | +18% | +10% | +25% |

| Batch Processing | +24% | +5% | +8% |

| Relationship Grouping | +15% | +22% | +12% |

| Mix and Batch | +32% | +28% | +15% |

| Combined Optimizations | +38% | +35% | +30% |

Figure 4: GraphRAG Performance Benchmark Results

This table shows the percentage improvement over baseline implementation across three key performance metrics. Enhanced Chunking provides the biggest boost to response quality (25%) due to better context preservation, while Mix and Batch delivers the highest ingestion speed improvement (32%). The Combined Optimizations row demonstrates that multiple techniques work synergistically, achieving the best overall performance across all categories.

Benefits and Challenges

The Advantages of GraphRAG

Let’s talk about what you actually gain by implementing GraphRAG:

1. Deeper Contextual Understanding: By combining semantic similarity with relationship awareness, GraphRAG provides context that’s both broad and deep. You’re not just finding relevant information—you’re understanding how it all fits together.

2. Natural Multi-hop Reasoning: Complex queries that would require multiple rounds of traditional RAG can be handled in a single GraphRAG query. The system naturally follows chains of relationships to find answers.

3. Improved Answer Quality: With richer context comes better answers. LLMs can generate more accurate, nuanced responses when they understand not just what’s relevant but how things connect.

4. Knowledge Discovery: GraphRAG often surfaces unexpected connections that pure semantic search would miss. It’s like having a research assistant who says, “You didn’t ask about this, but it’s connected to your query in an interesting way.”

5. Domain Adaptability: The graph schema can be tailored to any domain, making GraphRAG valuable whether you’re working with medical research, financial data, or technical documentation.

Real-World Challenges

Now, let’s be honest about the challenges—because every technology has them:

Implementation Complexity: Setting up GraphRAG requires expertise in both vector and graph databases. You’re essentially managing two specialized systems plus the orchestration layer. This isn’t a weekend project.

Entity Extraction Quality: The value of your graph depends heavily on the quality of entity and relationship extraction. Poor extraction leads to sparse or inaccurate graphs, limiting the system’s effectiveness.

Performance Tuning: Balancing vector and graph operations for optimal performance requires careful tuning. What works for one query pattern might not work for another.

Cost Considerations: Running both vector and graph databases, plus the compute for entity extraction and embedding generation, adds up. Budget accordingly.

Maintenance Overhead: Keeping the vector and graph representations synchronized as documents update requires careful orchestration and can be operationally complex.

Future Directions in GraphRAG

Agentic GraphRAG

One of the most exciting developments is the emergence of agentic approaches to GraphRAG. Instead of fixed retrieval strategies, these systems use intelligent agents that dynamically decide how to navigate between vector and graph search based on the query.

Imagine agents that can:

- Analyze queries to determine the optimal retrieval strategy

- Dynamically adjust the balance between semantic and relationship search

- Learn from past queries to improve future retrieval patterns

- Collaborate with other agents to handle complex, multi-faceted queries

This isn’t just theoretical—early implementations are showing promise in research labs and cutting-edge production systems.

Integration with Advanced RAG Techniques

The GraphRAG approach is also being combined with other advanced RAG techniques:

Dynamic RAG (DRAG): This approach injects compressed embeddings of retrieved entities directly into the generative model, potentially combining beautifully with GraphRAG’s entity-centric retrieval.

Self-Reflective RAG: Systems that can critique and refine their own retrievals could use GraphRAG’s relationship awareness to identify gaps in retrieved context.

Multimodal GraphRAG: Extending the approach to handle images, audio, and video alongside text, creating truly comprehensive knowledge graphs.

The Broader Implications

As we look to the future, GraphRAG represents more than just a technical advancement—it’s a step toward AI systems that think more like humans do. We don’t just recall isolated facts; we understand how things connect, influence each other, and create emergent patterns.

This has profound implications for:

- Scientific Discovery: AI systems that can identify novel connections between research findings

- Medical Diagnosis: Tools that understand the complex web of symptoms, conditions, and treatments

- Business Intelligence: Platforms that grasp the full context of market dynamics and organizational relationships

- Education: Learning systems that can trace conceptual relationships and dependencies

Conclusion

GraphRAG represents a fundamental shift in how we approach knowledge retrieval for AI systems. By bridging the gap between semantic understanding and relationship awareness, it’s enabling a new generation of AI applications that can navigate complex information landscapes with human-like comprehension.

While implementing GraphRAG comes with its challenges—technical complexity, resource requirements, and the need for high-quality entity extraction—the benefits are compelling. From medical researchers tracing drug interactions to financial analysts understanding market dynamics, GraphRAG is proving its worth in real-world applications.

As we continue to push the boundaries of what’s possible with AI, approaches like GraphRAG that combine multiple knowledge representation strategies will become increasingly important. We’re not just building systems that can find information—we’re building systems that can understand it.

Practical Takeaways

- Start with a clear understanding of your relationship needs—not every application requires the full power of GraphRAG

- Invest in quality entity extraction—it’s the foundation of your knowledge graph

- Design your graph schema thoughtfully—it should reflect the natural relationships in your domain

- Plan for hybrid retrieval from the start—retrofitting graph capabilities onto existing RAG systems is much harder

- Monitor and optimize both vector and graph performance—they have different scaling characteristics and bottlenecks

The future of AI isn’t just about bigger models or better algorithms—it’s about building systems that truly understand the rich, interconnected nature of knowledge. GraphRAG is a crucial step on that journey, and we’re just beginning to explore its full potential.

References

[1] P. Lewis, E. Perez, A. Piktus, F. Petroni, V. Karpukhin, N. Goyal, H. Küttler, M. Lewis, W. Yih, T. Rocktäschel, S. Riedel, and D. Kiela, “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” Advances in Neural Information Processing Systems, vol. 33, pp. 9459-9474, 2020.[2] Microsoft Research, “GraphRAG: Unlocking LLM Discovery on Narrative Private Data,” Microsoft Research Blog, https://www.microsoft.com/en-us/research/blog/graphrag-unlocking-llm-discovery-on-narrative-private-data/ (2024).

[3] R. Angles and C. Gutierrez, “Survey of graph database models,” ACM Computing Surveys, vol. 40, no. 1, pp. 1-39, 2008.

[4] I. Robinson, J. Webber, and E. Eifrem, Graph Databases: New Opportunities for Connected Data, 2nd ed. O’Reilly Media, 2015.

[5] Y. A. Malkov and D. A. Yashunin, “Efficient and robust approximate nearest neighbor search using Hierarchical Navigable Small World graphs,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 4, pp. 824-836, 2020.

[6] Qdrant Documentation, “HNSW Index,” https://qdrant.tech/documentation/concepts/indexing/#hnsw-index (2024).

[7] Neo4j Documentation, “Graph Database Concepts,” https://neo4j.com/docs/getting-started/graph-database/ (2024).

[8] J. Devlin, M. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding,” Proceedings of NAACL-HLT, pp. 4171-4186, 2019.

[9] M. Yasunaga, A. Bosselut, H. Ren, X. Zhang, C. D. Manning, P. Liang, and J. Leskovec, “Deep Bidirectional Language-Knowledge Graph Pretraining,” Advances in Neural Information Processing Systems, vol. 35, pp. 37309-37323, 2022.

[10] T. Gao, X. Yao, and D. Chen, “SimCSE: Simple Contrastive Learning of Sentence Embeddings,” Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pp. 6894-6910, 2021.

[11] A. Bordes, N. Usunier, A. Garcia-Duran, J. Weston, and O. Yakhnenko, “Translating Embeddings for Modeling Multi-relational Data,” Advances in Neural Information Processing Systems, vol. 26, 2013.

[12] Z. Wang, J. Zhang, J. Feng, and Z. Chen, “Knowledge Graph Embedding by Translating on Hyperplanes,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 28, no. 1, 2014.

[13] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is All You Need,” Advances in Neural Information Processing Systems, vol. 30, 2017.

[14] S. Liu, F. Yu, L. Zhang, and Y. Liu, “Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection,” arXiv preprint arXiv:2310.11511, 2023.

[15] Y. Gao, Y. Xiong, X. Gao, K. Jia, J. Pan, Y. Bi, Y. Dai, J. Sun, Q. Guo, M. Wang, and H. Wang, “Retrieval-Augmented Generation for Large Language Models: A Survey,” arXiv preprint arXiv:2312.10997, 2024.

[16] FastEmbed Documentation, “Getting Started with FastEmbed,” https://qdrant.github.io/fastembed/ (2024).

[17] LangChain Documentation, “Graphs,” https://python.langchain.com/docs/use_cases/graph/ (2024).

[18] D. Wang, L. Zou, and D. Zhao, “K-BERT: Enabling Language Representation with Knowledge Graph,” Proceedings of AAAI Conference on Artificial Intelligence, vol. 34, pp. 2901-2908, 2020.

[19] J. Zhang, X. Zhang, J. Yu, J. Tang, J. Tang, C. Li, and H. Chen, “Subgraph Retrieval Enhanced Model for Multi-hop Knowledge Base Question Answering,” Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, pp. 5773-5784, 2022.

[20] OpenAI, “GPT-4 Technical Report,” arXiv preprint arXiv:2303.08774, 2023.